As part of my weekly analysis of the campaign race between Obama and Romney, I looked at the sentiment of the tweets mentioning each of them. That report will be up later today, but I wanted to share something interesting I found while working on it.

I did a manual sentiment analysis of the tweets to determine whether each one is pro-candidate (or at least neutral) or anti-candidate. The manual process consists of selecting a statistically valid random sample and then reading each tweet to score it. It’s slow and painstaking, but it produces the best results because I, as a human who follows current events, understand the text and the context, and can usually guess the writer’s intent.
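The post doesn’t say how the sample was drawn or how large it was, so here is a minimal sketch under stated assumptions: a simple random sample without replacement, a sample size from the usual normal-approximation formula n = z²·p(1−p)/e² with the worst case p = 0.5, and no finite-population correction. The ±5% / 95% targets are the ones the post reports. The corpus and the helper names `required_sample_size` and `draw_sample` are hypothetical.

```python
import math
import random

Z_95 = 1.96  # two-sided critical value for 95% confidence

def required_sample_size(moe: float, z: float = Z_95, p: float = 0.5) -> int:
    """Smallest n whose margin of error is at most `moe` (p=0.5 is the worst case)."""
    return math.ceil(z**2 * p * (1 - p) / moe**2)

def draw_sample(tweets, n, seed=None):
    """Pick n tweets uniformly at random, without replacement."""
    if n > len(tweets):
        raise ValueError("sample size exceeds number of tweets")
    rng = random.Random(seed)  # seeded so the same sample can be redrawn later
    return rng.sample(tweets, n)

# Hypothetical corpus standing in for one week of collected tweets.
corpus = [f"tweet {i}" for i in range(10_000)]
n = required_sample_size(0.05)  # 385 for +/- 5% at 95% confidence
sample = draw_sample(corpus, n, seed=42)
```

Each sampled tweet would then be read and scored by hand. The random draw is what makes the margin of error meaningful.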

But there are also automated tools for doing sentiment analysis, and I use these tools too. These tools look at the text, do a simplistic parsing of the tweet, and assign a score based upon the words that are used: “Hate” gets a negative score while “Love” gets a positive score. You might think that these tools are very crude, and you’d be right. But that doesn’t mean they don’t deliver some useful insight.
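The post doesn’t name the tool it used, so the following is only a toy sketch of the word-counting idea: each word found in a small lexicon adds its weight, and everything else counts as zero. The lexicon `LEXICON` and the functions `score_tweet` and `label` are hypothetical. Real tools use far larger lexicons and may handle negation, emphasis, and so on.

```python
import re

# Hypothetical toy lexicon; real tools use lexicons with thousands of scored words.
LEXICON = {"love": 1, "great": 1, "win": 1, "hate": -1, "bad": -1, "worst": -1}

def score_tweet(text: str) -> int:
    """Sum lexicon weights over the lowercase word tokens of a tweet."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return sum(LEXICON.get(tok, 0) for tok in tokens)

def label(score: int) -> str:
    """Map a numeric score to a coarse sentiment label."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

for t in ["I love this guy", "I hate the debate", "Watching the speech tonight"]:
    print(label(score_tweet(t)), "|", t)
```

This also shows why the approach is crude. A tweet with no lexicon words scores 0, and a sarcastic “I just love waiting in line” scores as positive.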

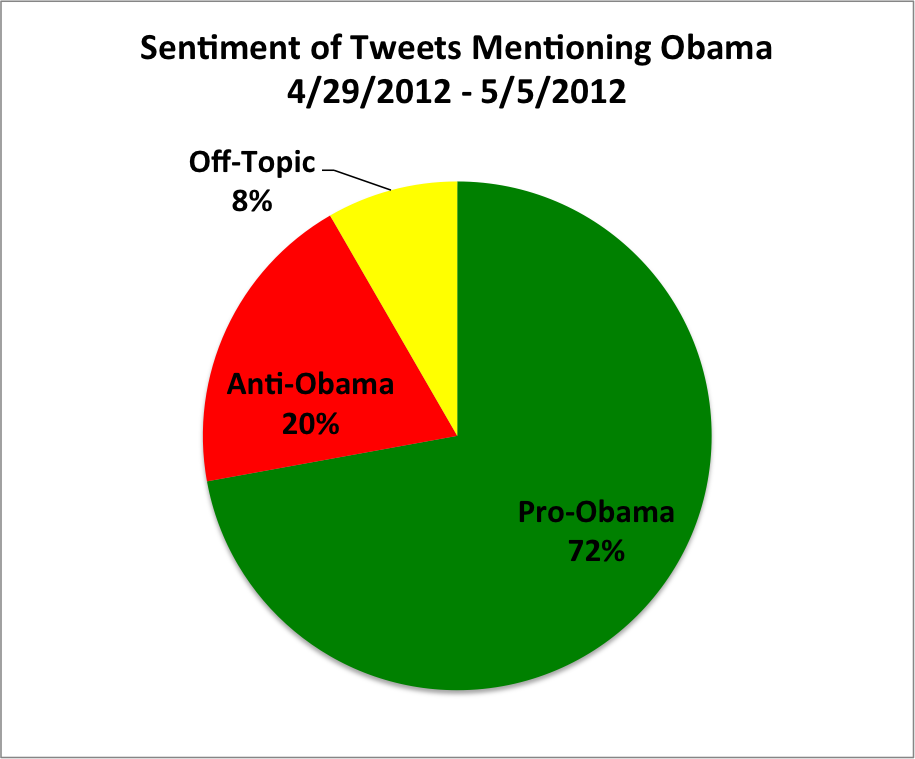

Take, for example, last week’s (4/29-5/5/2012) tweets mentioning Obama. Here’s how I scored them manually:

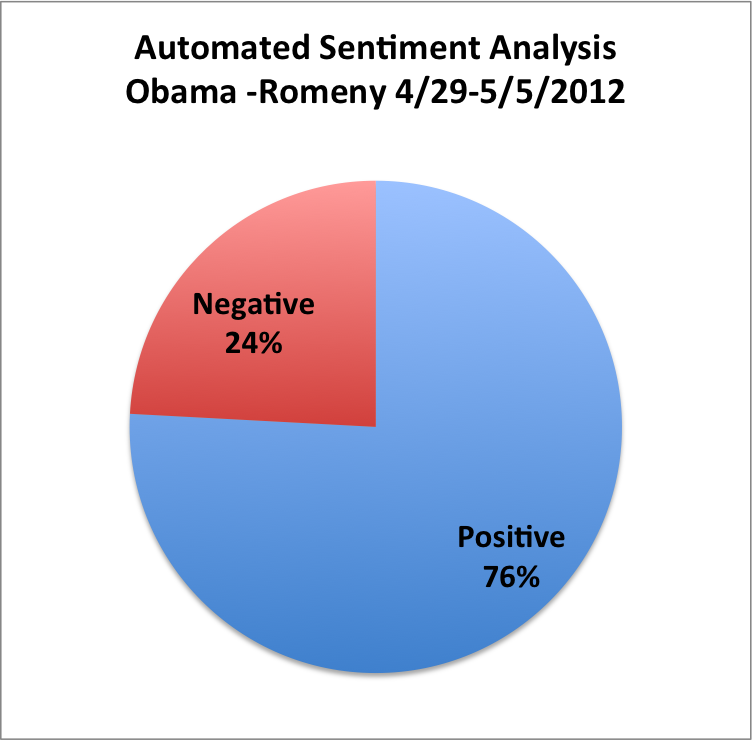

The margin of error is +/- 5% at a 95% confidence level (which is pretty much the gold standard for surveys). How did the automated tool do?

Very close! You’ll note there’s no “off topic” or “neutral” category in this chart. That’s because a huge majority of tweets end up with a neutral score, which reflects a failure of the scoring system rather than real indifference. Still, both the 76% and 24% figures are within the margin of error of the manual survey, so the two methods effectively produced the same result. Fantastic! (Obama -Romney means I looked only at tweets that mentioned Obama but not Romney; I didn’t want the score to be confused by tweets that were negative about Romney but also mentioned Obama.)
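The comparison above has three steps: keep tweets that mention one candidate but not the other, drop neutral (zero) scores and compute the positive share, and check whether the automated share falls inside the manual estimate’s margin of error. This is a minimal sketch of those steps under stated assumptions. It uses plain substring matching, scores that are already computed, and the standard normal-approximation margin at 95%. All function names are hypothetical, and the numbers in the usage line are made up rather than taken from the charts.

```python
import math

def mentions(text: str, name: str) -> bool:
    # Plain case-insensitive substring test, so "Obamacare" also counts as a mention.
    return name.lower() in text.lower()

def only_about(tweets, include: str, exclude: str):
    """Tweets that mention `include` but not `exclude`, in the spirit of 'Obama -Romney'."""
    return [t for t in tweets if mentions(t, include) and not mentions(t, exclude)]

def positive_share(scores):
    """Fraction of non-neutral scores that are positive; zero (neutral) scores are dropped."""
    polar = [s for s in scores if s != 0]
    if not polar:
        raise ValueError("no non-neutral scores")
    return sum(1 for s in polar if s > 0) / len(polar)

def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Normal-approximation margin of error for a sample proportion (95% by default)."""
    return z * math.sqrt(p * (1 - p) / n)

def agrees(manual_share: float, automated_share: float, moe: float) -> bool:
    """True when the automated share lies within the manual estimate's margin of error."""
    return abs(automated_share - manual_share) <= moe

tweets = ["Obama speech was great", "Romney and Obama debate tonight", "obama is the worst"]
print(only_about(tweets, "obama", "romney"))  # ['Obama speech was great', 'obama is the worst']
print(agrees(0.70, 0.74, 0.05))  # hypothetical shares, not the post's data
```

Dropping the zeros matters here. Because most tweets score neutral, the automated percentages are really shares of the minority of tweets that hit the lexicon at all.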

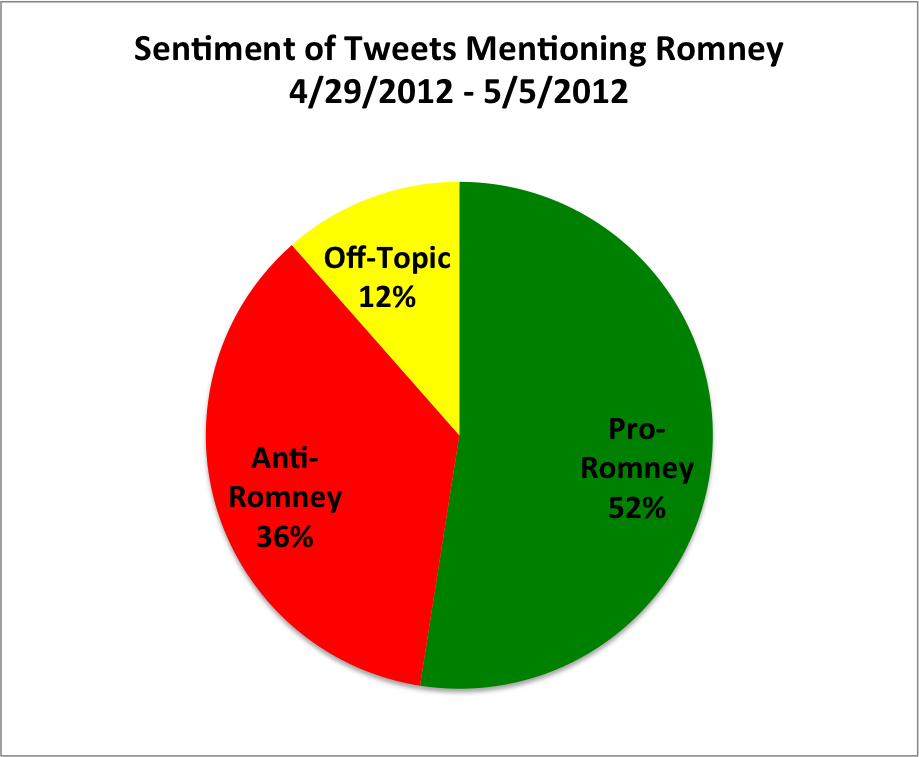

But does this always work? Let’s look at Romney’s sentiment as scored manually:

Mitt didn’t have that great a Twitter week, although a lot of the negatives came from other Republicans unhappy about losing Santorum and Newt, or from people still supporting Ron Paul. So many of the negative tweets probably didn’t come from Obama supporters.

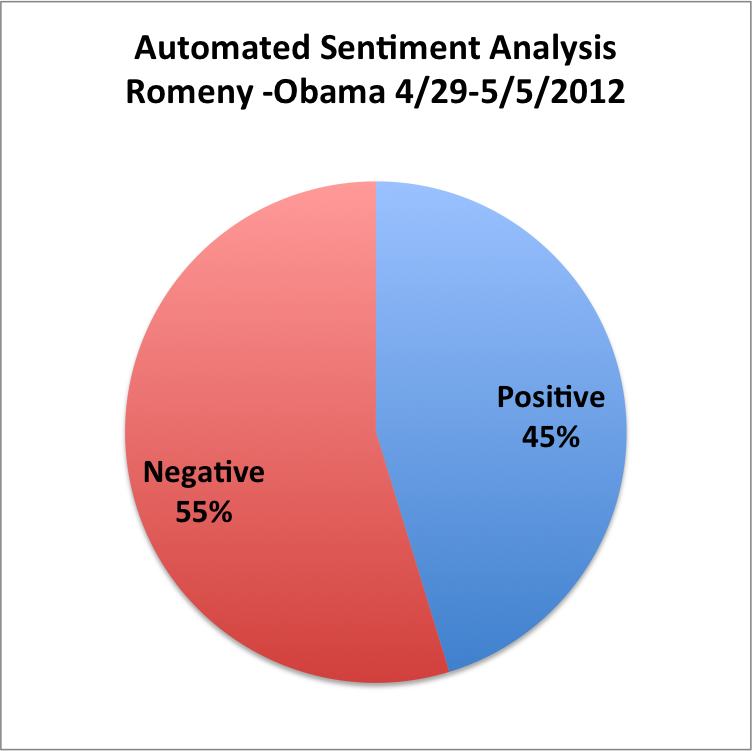

Let’s compare this to the automated sentiment analysis:

Quite different! Why? Mostly because, although I tried to filter out posts that were really about Obama, a lot of them snuck through because they never mention him by name. #Julia and other anti-Obama hashtags were a common source.

The lesson here is that for automated tools you need to scrub the tweets very carefully to make sure they are really on the topic you are interested in. Put another way: so many Romney supporters like to talk about how bad the President is instead of how great Romney is that it skews the automated analysis.
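One way to do that scrubbing is to extend a name-based exclusion with a hand-maintained list of off-topic terms. This is a minimal sketch, assuming whole-word, case-insensitive matching. Only #Julia is named in the post as a culprit, so the list `OFF_TOPIC_TERMS` is hypothetical and would grow as you read samples of the data. The function names are also hypothetical.

```python
import re

# Hypothetical exclusion list: "obama" repeats the name filter; "#julia" is the
# hashtag the post names. Other entries would be added by hand over time.
OFF_TOPIC_TERMS = ["obama", "#julia"]

def contains_term(text: str, term: str) -> bool:
    """Case-insensitive whole-word/hashtag match ('#julia' won't match '#juliafacts')."""
    pattern = r"(?<!\w)" + re.escape(term) + r"(?!\w)"
    return re.search(pattern, text, flags=re.IGNORECASE) is not None

def is_on_topic(text: str, subject: str, off_topic=OFF_TOPIC_TERMS) -> bool:
    """Keep a tweet only if it names the subject and none of the off-topic terms."""
    if not contains_term(text, subject):
        return False
    return not any(contains_term(text, term) for term in off_topic)

tweets = [
    "Romney rally in Ohio today",
    "Romney fans love #Julia jokes",
    "Romney vs Obama on jobs",
]
print([t for t in tweets if is_on_topic(t, "romney")])  # ['Romney rally in Ohio today']
```

A term list like this will always lag behind new hashtags, which is why reading a sample by hand remains the check on the automated numbers.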

I’ll be trying to work in a better algorithm for analyzing sentiment, and I know others have made great strides in that direction as well. But the key thing to remember is that automated sentiment analysis works best when the tweets are talking about the subject you’re interested in, and poorly when people mention your subject in passing while talking about something else. When you see people give a score to a collection of tweets, you should generally assume that they are using automated tools rather than scoring them by hand. And so you should keep in mind the limitations of those tools.